Your Guide to Fact-Checking Generative AI

4 March 2024

The legal world’s excitement over the research potential of ChatGPT was tempered in 2023 by a spate of judicial warnings about the dangers of ChatGPT’s inaccuracies and hallucinations. The judiciary’s position is clear: lawyers must fact-check AI-generated submissions or face harsh penalties. It’s also clear that GenAI is far less risky when accessed through robust legal research tech – such as Vincent AI with its easy source-checking capabilities and vast dataset of non-hallucinated statutes, regulations, and cases.

Judicial Warnings Over Generative AI For Legal Research

Judges are wary of Generative AI (GenAI). In June 2023, US District Judge Brantley Starr released an order requiring all lawyers appearing before him to file a certification attesting either that they did not use GenAI to draft any part of their filings, or that any AI-drafted language had been checked for accuracy by a human.

Starr was understandably concerned about ChatGPT’s well-documented ‘hallucination’ problem. Around the same time, the judge in Mata v Avianca – a decision now so infamous in legal circles that it’s simply known as ‘The ChatGPT Case’ – sanctioned a lawyer who had used ChatGPT to help write a legal brief that was full of fake cases and citations.

And in the UK, concern over false GenAI outputs prompted the Courts and Tribunals Judiciary to release Artificial Intelligence: Judicial Guidance. The guidance warns of risks such as fictitious cases, and advises judges to confirm with lawyers “that they have independently verified the accuracy of any research or case citations…”

The judiciary’s emphasis on verifying legal sources indicates that it’s not GenAI itself they object to (although Starr had some strong words about ChatGPT’s supposed moral weaknesses), but lawyers’ over-confidence in its outputs, which lets hallucinations reach the court unchecked. Even Judge Starr’s draconian order allows AI-generated language in legal briefs as long as a human checks it against either print reporters or traditional legal databases.

So if lawyers want to leverage the much-touted efficiency benefits of GenAI, they must review all court submissions that contain AI-generated content, checking not only the accuracy of quotes and analysis but also that each citation actually exists.

Won’t Fact-Checking Negate The Time Saved By GenAI?

Checking GenAI output takes some time, but it ensures that mistakes are minimized, and clients (and courts) can trust that your submissions are valid.

Most importantly, lawyers need to understand the crucial difference between (1) creating an OpenAI account and putting legal prompts into ChatGPT (which led to most of the ChatGPT-related cases and admonishments in 2023) and (2) using specialized GPT-backed legal research tech. The latter will draw its sources from real, frequently updated cases, statutes, and regulations (rather than just legal content in the public domain and on Wikipedia), and should offer built-in verification capabilities that make fact-checking quick and easy.

Vincent AI: Source-checking Made Easy

Vincent AI is built on the philosophy that lawyers using GenAI (even Vincent AI itself) should reduce the risk of hallucinations and inaccuracies through this maxim: ‘trust but verify’. Vincent AI gives lawyers a high degree of certainty around the results they get by, first, making it easy for them to carry out verification, and second, guaranteeing that all the legal sources on which Vincent AI ‘runs’ are authoritative and reputable.

Vincent AI makes it easy to verify underlying sources

Unlike a lot of competitor legal research tools, Vincent AI doesn’t hide its ‘thought process’. Competitors are a ‘Black Box’; Vincent AI is a ‘Clear Box’. Lawyers can easily see how Vincent AI arrived at a particular result or document — making verification more meaningful and aligned with increasingly stringent judicial standards.

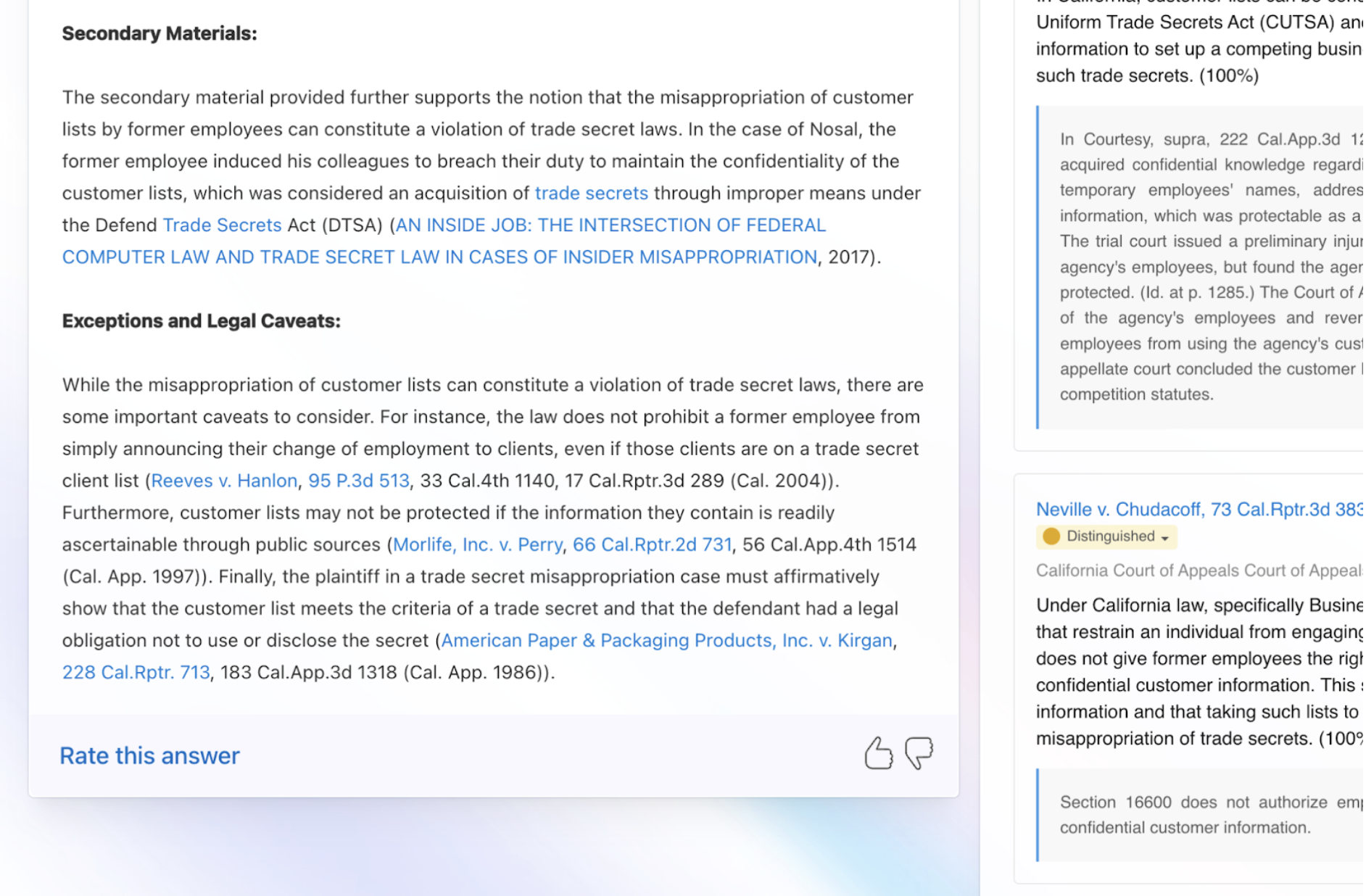

The image above shows the legal authorities on the right-hand side, along with each source’s most relevant passage in a block quote. This makes ‘Trust but Verify’ fast and simple. Of course, you can also validate a source by clicking through to the primary source. Links to each authority cited are also embedded in the Memorandum. Both allow for quick fact-checking.

Large Language Models are sycophants: they often tell users what they want to hear. Vincent AI counters that sycophancy through prompting: each Vincent AI memo provides a summary of Exceptions and Legal Caveats. In this way, Vincent AI’s memorandum reflects not just what a user wants to hear, but what a user needs to hear.

Importantly, Vincent also allows you to modify the list of authorities considered so you have full control over which primary and secondary sources are included. With Vincent and its modifiable “Clear Box,” the user is always in control.

For added assurance, for each source (e.g., case, statute, regulation), Vincent AI also offers a useful ‘confidence score’. This score reflects how well a particular legal source answers the user’s question, with its particular legal nuances and facts. Shown at the end of the passages in the image below is a confidence score of 100%.

Vincent AI offers authoritative sources

Still, the ability to verify that a citation is appropriate might not be enough to eliminate inaccuracies. What’s needed (and what saves time) is trusted, authoritative source material at the outset.

This is one of Vincent AI’s great strengths: it draws from vLex’s global repository of reputable legal data and information, built up over decades. This dataset continues to grow — most recently with the addition of Docket Alarm’s collection of 800 million court filings.

Vincent AI Analyze Document reduces GenAI risk even further

There is an argument that lawyers have always had to take the time to fact-check legal submissions. It’s a valid point, but overlooks the fact that lawyers created those documents over a period of time — gaining familiarity with the citations and underlying law through the process of research, drafting, and redrafting. Simply printing out a ChatGPT-generated document doesn’t provide this sort of engagement.

That’s why the new Vincent AI Analyze Document can add another layer of protection against inaccuracies. As lawyers go through the steps of interrogating a document, they are essentially co-creating the result — deciding whether to ignore or use certain outputs.

And unlike ChatGPT, Vincent AI Analyze Document knows each document’s context, so lawyers don’t have to be prompt engineers. For example, is the uploaded document a Complaint or a Merger Agreement, and for each, what are a lawyer’s primary tasks for that particular document type? Vincent AI Analyze Document customizes its offered tasks accordingly. This is yet another step toward harnessing the benefits of GenAI while reducing the risk of hallucinations and inaccuracies that can otherwise cause lawyers to run afoul of judges.

The devil is in the defaults. And Vincent AI’s defaults are strong: They allow users to “trust but verify” easily.
